Context Aware Chat API

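```python
import logging

from fastapi import FastAPI
from fastapi.responses import RedirectResponse

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

app = FastAPI()


@app.get("/", include_in_schema=False)
def root():
    # Send visitors at the root URL to the interactive API docs
    return RedirectResponse(url="/docs")


from chat_api import router as chat_router

# Mount the chat endpoints under /api (e.g. POST /api/chat)
app.include_router(chat_router, prefix="/api")

# Do not remove the main function while updating the app.
if __name__ == "__main__":
    import uvicorn

    # Host and port are assumed values; adjust them for your environment
    uvicorn.run(app, host="0.0.0.0", port=8080)
```

Frequently Asked Questions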

What are the main business applications for this Context Aware Chat API?

The Context Aware Chat API offers several business applications:

- Customer Support: Implement an AI-powered chatbot to handle customer inquiries 24/7.

How can the Context Aware Chat API improve user engagement for businesses?

The Context Aware Chat API can significantly enhance user engagement by:

- Providing personalized interactions based on conversation history.
- Offering quick and accurate responses to user queries.
- Maintaining context throughout the conversation, reducing user frustration.
- Enabling 24/7 availability for customer interactions.
- Scaling to handle multiple users simultaneously without compromising quality.

By implementing this API, businesses can offer a more interactive and responsive user experience, potentially increasing customer satisfaction and retention.

What security measures are implemented in the Context Aware Chat API?

The Context Aware Chat API incorporates several security measures:

- Session management using unique session IDs.
- Content Security Policy (CSP) headers to mitigate cross-site scripting (XSS) attacks.
- An X-Content-Type-Options header to prevent MIME type sniffing.
- An X-Frame-Options header to protect against clickjacking.
- HTTP Strict Transport Security (HSTS) to instruct browsers to connect only over HTTPS.

These measures help protect user data against common web vulnerabilities such as cross-site scripting, MIME type sniffing, and clickjacking, which matters for businesses handling sensitive customer interactions.
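The header-based measures in the list above can be applied to every response with a small ASGI middleware. This is a sketch, not the template's own code: the class name `SecurityHeadersMiddleware` is hypothetical, and the header values (a `default-src 'self'` CSP, `DENY` for framing, a one-year HSTS `max-age`) are assumptions. It uses only the plain ASGI interface, so it needs no extra imports.

```python
# Header values below are assumptions; tune them for your deployment
SECURITY_HEADERS = [
    (b"content-security-policy", b"default-src 'self'"),
    (b"x-content-type-options", b"nosniff"),
    (b"x-frame-options", b"DENY"),
    (b"strict-transport-security", b"max-age=31536000; includeSubDomains"),
]


class SecurityHeadersMiddleware:
    """Pure ASGI middleware that adds security headers to every HTTP response."""

    def __init__(self, app):
        self.app = app

    async def __call__(self, scope, receive, send):
        if scope["type"] != "http":
            # Pass lifespan and websocket traffic through untouched
            await self.app(scope, receive, send)
            return

        async def send_with_headers(message):
            if message["type"] == "http.response.start":
                headers = list(message.get("headers", []))
                present = {name.lower() for name, _ in headers}
                # Add each header unless the route already set it
                for name, value in SECURITY_HEADERS:
                    if name not in present:
                        headers.append((name, value))
                message = {**message, "headers": headers}
            await send(message)

        await self.app(scope, receive, send_with_headers)
```

It would be registered in `main.py` with `app.add_middleware(SecurityHeadersMiddleware)`. Note that a strict `default-src 'self'` policy can block the CDN-hosted scripts that FastAPI's built-in `/docs` page loads, so the CSP value may need loosening if you rely on that page.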

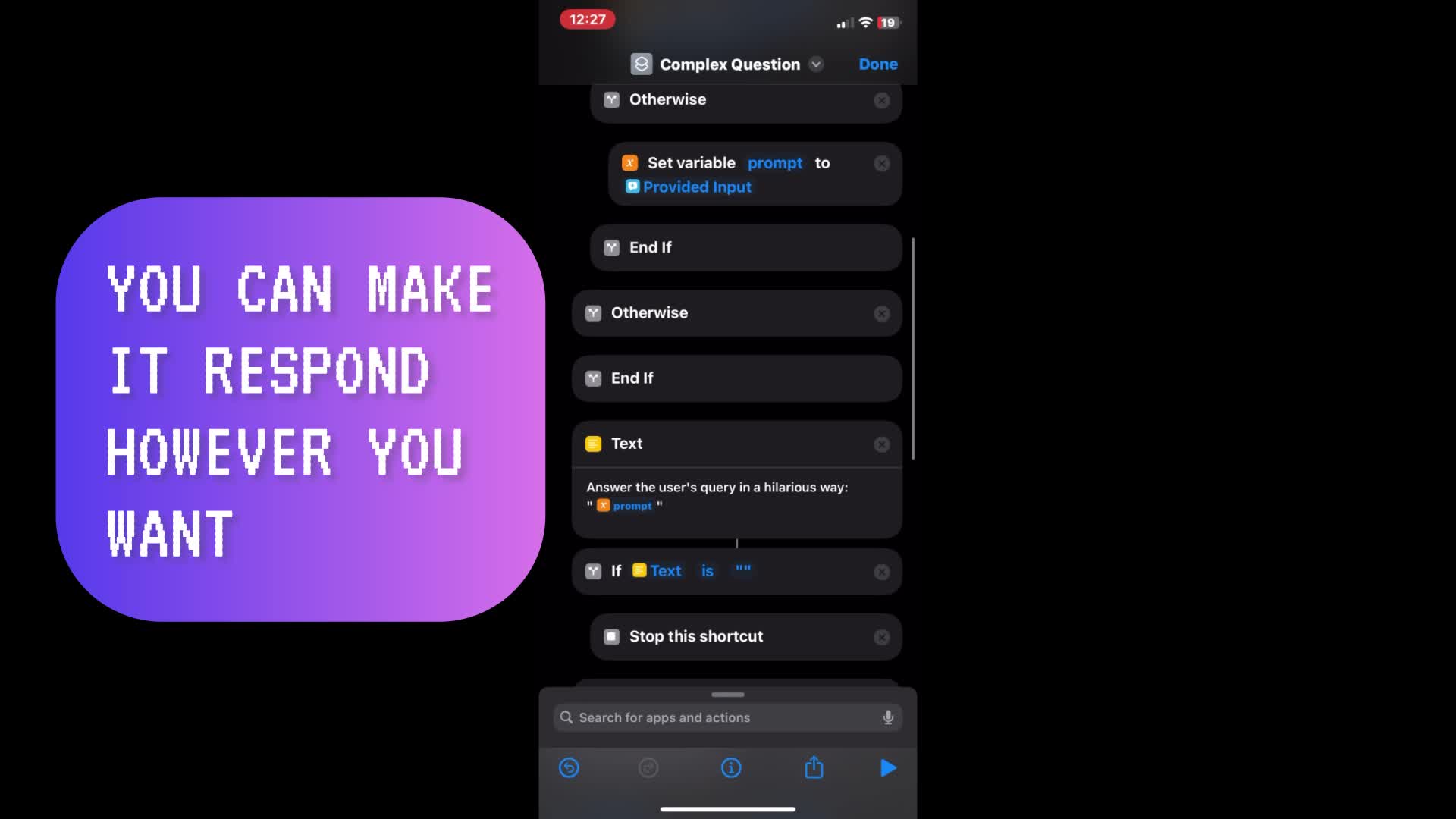

How can I customize the language model used in the Context Aware Chat API?

The Context Aware Chat API uses the llm_prompt function from the abilities module to generate responses. To customize the language model, you can modify the llm_prompt function call in the chat_with_llm function within chat_api.py. Here's an example of how you might change the model and its parameters:

```python
llm_response = llm_prompt(prompt=context, model="gpt-3.5-turbo", temperature=0.7)
```

In this example, the model is set to "gpt-3.5-turbo" and the temperature to 0.7; higher temperatures generally produce more varied, creative responses. Adjust these parameters to your requirements and to what your chosen language model supports.
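To switch models without editing `chat_api.py`, the parameters could be read from environment variables. A minimal sketch, assuming hypothetical variables `LLM_MODEL` and `LLM_TEMPERATURE` and keeping the values from the `llm_prompt` example as defaults:

```python
import math
import os
from typing import Mapping, Optional

DEFAULT_MODEL = "gpt-3.5-turbo"
DEFAULT_TEMPERATURE = 0.7


def llm_settings(env: Optional[Mapping[str, str]] = None) -> dict:
    """Collect model parameters from environment variables (hypothetical names)."""
    env = os.environ if env is None else env
    model = env.get("LLM_MODEL", DEFAULT_MODEL)
    try:
        temperature = float(env.get("LLM_TEMPERATURE", DEFAULT_TEMPERATURE))
    except ValueError:
        # Fall back to the default on unparsable input
        temperature = DEFAULT_TEMPERATURE
    if not math.isfinite(temperature):
        temperature = DEFAULT_TEMPERATURE
    # 0-2 is the range OpenAI's chat models accept; adjust for other providers
    temperature = min(max(temperature, 0.0), 2.0)
    return {"model": model, "temperature": temperature}
```

The call would then become `llm_response = llm_prompt(prompt=context, **llm_settings())`, which assumes `llm_prompt` accepts `model` and `temperature` keyword arguments as in the example above.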

How can I extend the Context Aware Chat API to include additional endpoints?

To add new endpoints to the Context Aware Chat API, you can create new route handlers in separate files and include them in the main.py file. Here's an example of how to add a new GET endpoint:


Introduction to the Context Aware Chat API Template

Welcome to the Context Aware Chat API Template! This template is designed to help you build a simple chat API that interacts with a large language model (LLM) to provide context-aware responses. Ideal for customer support or interactive applications, this API keeps track of the conversation history to maintain context and deliver more relevant replies.

Getting Started

To begin using this template, simply click on "Start with this Template" on the Lazy platform. This will pre-populate the code in the Lazy Builder interface, so you won't need to copy, paste, or delete any code manually.

Test: Deploying the App

Once you have started with the template, press the "Test" button to deploy the app. The Lazy platform handles all deployment aspects, so you don't need to worry about installing libraries or setting up your environment. The deployment process will launch the Lazy CLI, and you will be prompted for any required user input only after using the Test button.

Using the App

After deployment, Lazy will provide you with a dedicated server link to use the API. Since this template uses FastAPI, you will also receive a link to the API documentation. You can interact with the API by sending POST requests to the "/api/chat" endpoint with a JSON payload containing your message. Here's a sample request you might send:

```json
{
  "message": "Hello, can you help me with my issue?"
}
```

And here's an example of the response you might receive:

```json
{
  "session_id": "a unique session identifier",
  "response": "Of course, I'm here to help. What seems to be the problem?"
}
```

The API will maintain a history of the conversation to ensure that the context is preserved throughout the interaction.
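One way to keep per-session history is an in-memory store keyed by a random session ID, which places earlier turns before each new message when building the prompt. The class name `ChatSessions`, the `role: text` prompt layout, and the 20-message cap are assumptions for illustration; `chat_api.py` may store and format history differently.

```python
import secrets
from collections import defaultdict


class ChatSessions:
    """In-memory conversation history keyed by session ID (illustrative sketch)."""

    def __init__(self, max_messages: int = 20):
        # Cap on stored messages per session; at least 1
        self.max_messages = max(1, max_messages)
        self._history = defaultdict(list)

    def new_session(self) -> str:
        # Unguessable, URL-safe random identifier for a new conversation
        return secrets.token_urlsafe(16)

    def add(self, session_id: str, role: str, text: str) -> None:
        history = self._history[session_id]
        history.append((role, text))
        # Drop the oldest messages so the prompt stays bounded
        del history[:-self.max_messages]

    def build_context(self, session_id: str, message: str) -> str:
        # Earlier turns first, then the new user message, one per line
        lines = [f"{role}: {text}" for role, text in self._history.get(session_id, [])]
        lines.append(f"user: {message}")
        return "\n".join(lines)
```

In a chat handler, the flow would be: build the context, pass it to the model, then record both the user message and the reply with `add`. Because the history lives in process memory, it is lost on restart and is not shared between worker processes; an external store such as a database or cache would be needed for that.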

Integrating the App

If you wish to integrate this chat API into an existing service or frontend, you will need to send HTTP requests to the server link provided by Lazy. You can use the API endpoints to send and receive messages programmatically from your application.

For example, if you're building a web application, you can use JavaScript to make AJAX calls to the chat API, sending user messages and displaying the API's responses in your application's UI.
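The same request/response cycle can be driven from a Python backend using only the standard library. A minimal sketch: `ChatClient` and its methods are hypothetical names, and because it is an assumption whether the template tracks sessions by cookie or by the `session_id` returned in the response body, the client keeps cookies and also echoes the `session_id` back.

```python
import json
import urllib.request
from http.cookiejar import CookieJar
from typing import Optional


class ChatClient:
    """Minimal standard-library client for POST /api/chat (illustrative sketch)."""

    def __init__(self, base_url: str, timeout: float = 30.0):
        self.base_url = base_url.rstrip("/")
        self.timeout = timeout
        self.session_id: Optional[str] = None
        # Cookie-aware opener, in case the server tracks the session via a cookie
        self._opener = urllib.request.build_opener(
            urllib.request.HTTPCookieProcessor(CookieJar())
        )

    def build_request(self, message: str) -> urllib.request.Request:
        payload = {"message": message}
        if self.session_id is not None:
            # Assumption: the server accepts the returned session_id in the body
            payload["session_id"] = self.session_id
        return urllib.request.Request(
            f"{self.base_url}/api/chat",
            data=json.dumps(payload).encode("utf-8"),
            headers={"Content-Type": "application/json"},
            method="POST",
        )

    def send(self, message: str) -> str:
        request = self.build_request(message)
        with self._opener.open(request, timeout=self.timeout) as response:
            body = json.loads(response.read().decode("utf-8"))
        # Remember the session ID so follow-up messages share context
        self.session_id = body.get("session_id", self.session_id)
        return body["response"]
```

Against a deployed instance, you would create `ChatClient` with the server link Lazy provides and call `send("Hello, can you help me with my issue?")`.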

Remember, no additional setup is required for the 'abilities' module, as it is a built-in module within the Lazy platform.

By following these steps, you can quickly set up and integrate the Context Aware Chat API into your application, providing an interactive and engaging user experience.

Template Benefits

Here are 5 key business benefits for this template:

- Scalable Customer Support: This chat API can be integrated into customer service platforms, providing AI-powered support that can handle multiple customer queries simultaneously, reducing wait times and improving customer satisfaction.
- Personalized User Experience: The session-based chat history allows for context-aware conversations, enabling more personalized and relevant responses to user queries, which can enhance user engagement and loyalty.
- Cost-Effective Knowledge Management: By leveraging a large language model, businesses can create a centralized knowledge base that can be easily accessed and utilized across various departments, reducing the need for extensive manual documentation and training.
- Rapid Prototyping for AI Applications: The template provides a solid foundation for quickly developing and testing AI-powered chat applications, allowing businesses to iterate on new ideas and concepts with minimal setup time.
- Enhanced Security and Compliance: With built-in security headers and cookie management, the template helps businesses maintain a secure communication channel, which is crucial for handling sensitive customer information and meeting regulatory requirements in various industries.
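The cookie management mentioned in this last benefit might look like the following minimal sketch, which builds a `Set-Cookie` value for the session ID with the standard library's `http.cookies` module. The function name `build_session_cookie`, the cookie name `session_id`, the one-hour lifetime, and the `Strict` same-site policy are assumptions rather than the template's actual settings.

```python
from http.cookies import SimpleCookie


def build_session_cookie(session_id: str, max_age: int = 3600) -> str:
    """Return a Set-Cookie header value for the session ID (illustrative sketch)."""
    cookie = SimpleCookie()
    cookie["session_id"] = session_id
    morsel = cookie["session_id"]
    morsel["path"] = "/"
    morsel["max-age"] = max_age
    morsel["secure"] = True        # only sent over HTTPS
    morsel["httponly"] = True      # not readable from page JavaScript
    morsel["samesite"] = "Strict"  # not sent on cross-site requests
    return morsel.OutputString()
```

In a FastAPI handler, `response.set_cookie(...)` accepts the same attributes as keyword arguments (`max_age`, `secure`, `httponly`, `samesite`).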